Success Stories

We always put the needs of our customers first

Because we know what matters most: Solving your problems thoroughly and permanently

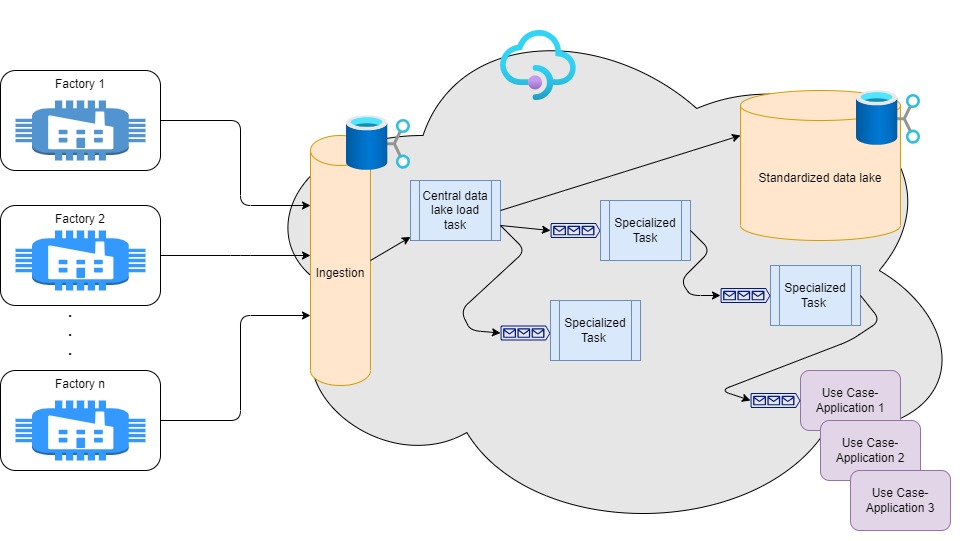

Manufacturing Analytical Cloud Datalake

A prominent manufacturing company faced the challenge of identifying scrap parts faster while relying less on hard-to-train personnel. Drawing on our expertise in cloud technologies and Microsoft Azure, we built a data lake for the client that can ingest, store and process vast quantities of manufacturing data, especially images from computer vision systems, in real time. This enabled the client’s AI experts to train models on cross-site image data and deploy them from the cloud back to the production machines.

By applying infrastructure-as-code and cloud resources such as queues and Kubernetes (AKS), we created a fast, responsive, yet cost-efficient, specialized cloud data lake. It also features a fine-grained role concept that is seamlessly integrated with the company’s Active Directory. This use case illustrates our strength in leveraging advanced technologies to solve real-world cloud challenges and drive tangible business value.

Context

Manufacturing, Machine Parts

Skills

Cloud Engineering, Mass Data Processing, Real-time systems design

Scope

12-month initial development period with ongoing data source extensions

Technologies

Azure, Queues, Storage Accounts, Federated Identities, Python

Telemetry Backbone (TBB)

In the automotive industry, the share of additional digital services in the value chain is growing rapidly. An essential basis for creating such services is high-frequency telemetry data from vehicles and vehicle components. In a project for a global automotive supplier, we developed the Telemetry Backbone, a data streaming architecture that turns Big Data into Fast Data. The sensor data is streamed and stored in real time and is thus immediately available to our customer. Moreover, the Telemetry Backbone acts as a data processing pipeline in which the raw data can be enriched and marked with quality indicators. This improves data quality and depth of information without losing the original granularity.

But what exactly are the benefits for our customer? The Telemetry Backbone has proven to be an enormous asset in three areas in particular. For data scientists, it enables the implementation of machine learning models that predict the durability and maintenance intervals of the tyre (predictive maintenance). In quality management, the processed data can be used to trace the causes of a premature quality defect. In product and cost optimization, TBB also plays an important role: with the help of AI, the data can be used to simulate the possible effects of modifications to material composition and processing.
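The enrichment step described above can be illustrated with a minimal sketch. It is not the Telemetry Backbone implementation (which the project built with Java and Kafka); the record fields, thresholds and quality labels are hypothetical. It only shows the idea of attaching a quality indicator to each sample while keeping the raw reading untouched.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Reading:
    """One raw sensor sample (field names are assumptions for illustration)."""
    sensor_id: str
    timestamp_ms: int
    pressure_kpa: float


@dataclass(frozen=True)
class EnrichedReading:
    raw: Reading   # the original sample, kept at full granularity
    quality: str   # "ok", "out_of_range" or "out_of_order"


def enrich(readings: Iterable[Reading],
           low: float = 100.0, high: float = 400.0) -> Iterator[EnrichedReading]:
    """Tag each reading with a quality indicator without altering it."""
    last_seen = {}  # newest timestamp seen so far, per sensor
    for r in readings:
        previous = last_seen.get(r.sensor_id, -1)
        if not (low <= r.pressure_kpa <= high):
            quality = "out_of_range"      # assumed plausibility window
        elif r.timestamp_ms <= previous:
            quality = "out_of_order"      # arrived late or duplicated
        else:
            quality = "ok"
        last_seen[r.sensor_id] = max(previous, r.timestamp_ms)
        yield EnrichedReading(raw=r, quality=quality)
```

Because `enrich` is a generator, it processes samples one by one as they arrive, which mirrors the streaming character of the pipeline on a small scale.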

Context

Production, Quality Management, Predictive Maintenance

Skills

Cloud Scaling, Data Engineering, Data Science, Infrastructure

Scope

One-year development period with regular extensions

Technologies

Java, Kafka, Cassandra, AWS, Kubernetes

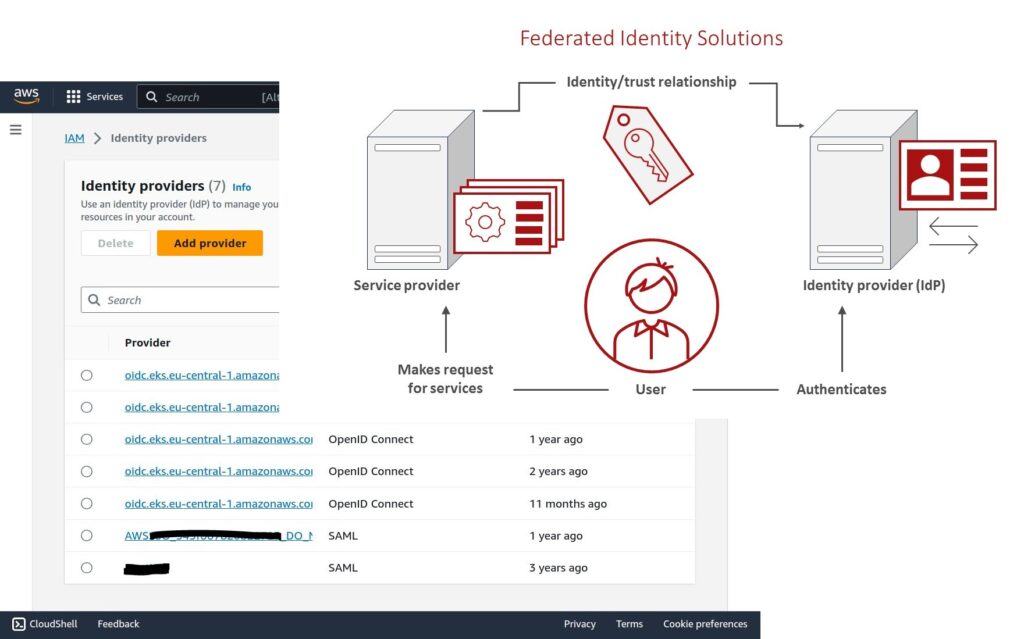

AWS Identity Access Management

A large manufacturing client struggled with security and configuration management of multiple legacy cloud applications. We advised the client on roles and permissions, strictly enforcing the use of temporary credentials instead of locally managed ones such as keys and secrets. In addition, identity federation enables our client to use existing identity providers such as Active Directory without the additional burden of managing dedicated users and credentials.

To implement this, we provided a conclusive, customer-specific checklist and documentation, held workshops and trained admin personnel. In the cloud, we used IAM roles and Workload Identities to standardize and automate the issuance and consumption of short-lived secure tokens. Using Federated Identities in combination with Cognito, we achieved a seamless integration of the existing user pool and its roles and permissions on cloud resources.
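As an illustration of how federated users can obtain short-lived credentials instead of stored keys, the sketch below builds the kind of IAM role trust policy that is commonly used with Cognito identity pools. It is an assumption about the general pattern, not the client's actual configuration, and the identity pool ID is a placeholder.

```python
import json


def cognito_trust_policy(identity_pool_id: str) -> dict:
    """Trust policy that lets authenticated identities of one Cognito
    identity pool assume the role via STS (temporary credentials only)."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": "cognito-identity.amazonaws.com"},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                # Only tokens issued for this identity pool are accepted.
                "StringEquals": {
                    "cognito-identity.amazonaws.com:aud": identity_pool_id
                },
                # Only signed-in (not guest) identities may assume the role.
                "ForAnyValue:StringLike": {
                    "cognito-identity.amazonaws.com:amr": "authenticated"
                },
            },
        }],
    }


# Placeholder pool ID, purely hypothetical.
EXAMPLE_POOL_ID = "eu-central-1:00000000-0000-0000-0000-000000000000"
policy_json = json.dumps(cognito_trust_policy(EXAMPLE_POOL_ID), indent=2)
```

The resulting JSON document would be attached to a role as its trust relationship; the permissions the role grants are defined separately in its permission policies.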

Context

Cloud Infrastructure and Security

Skills

Federated Identity, IAM Management

Scope

3-month setup period with ongoing support / admin consulting

Technologies

AWS IAM, Roles, Permissions, Policies, Workload Identities

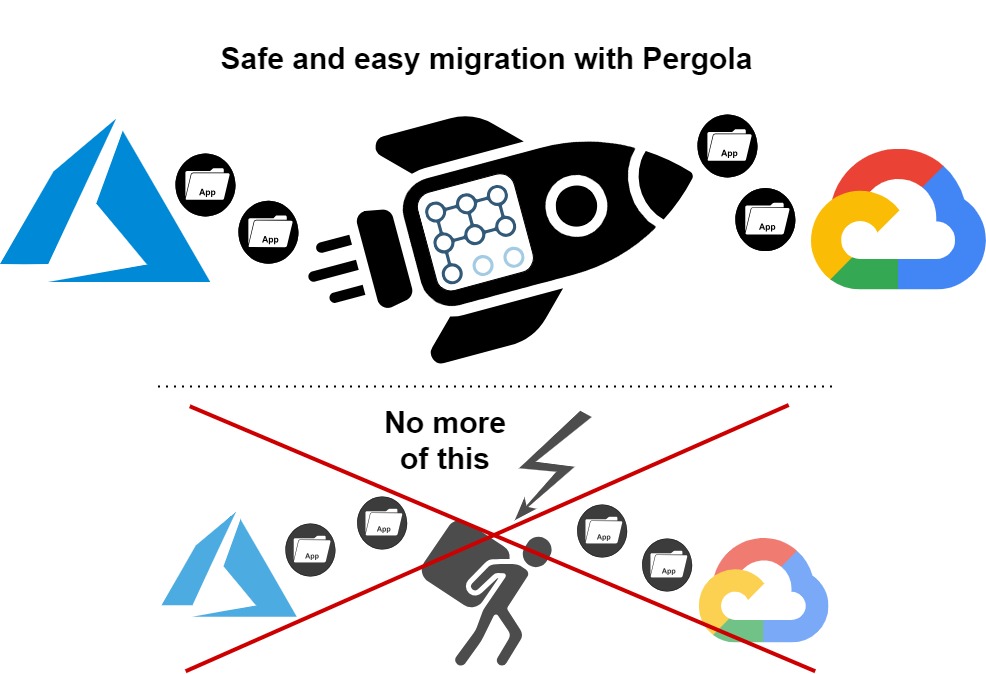

Azure to GCP Migration

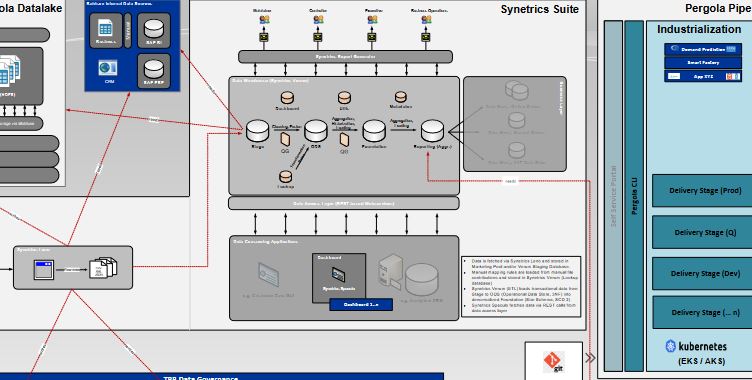

A client from the food processing industry had built various containerized, data-driven applications running in Microsoft Azure. When the company decided to migrate those applications to a private Google Cloud Platform environment to use exclusive features such as BigQuery, it ran into obstacles. In various technical planning sessions, we created a simplified cloud infrastructure plan for our client based on Kubernetes and Pergola as well as a detailed technical migration plan.

Our engineers first set up the new GCP infrastructure and tested various use cases, leveraging the staging concept of Pergola, before migrating productive environments. This reduced the challenges, which mainly involved data migration and network accessibility issues, while at the same time training the application owners in the more sophisticated CI/CD and software development lifecycle processes that the new platform enables.

Context

Cloud Infrastructure, Food Manufacturing

Skills

Cloud Platform Design, System Migration Planning

Scope

2.5-month setup and planning period with various individual migrations over a period of 4 months

Technologies

Kubernetes, Pergola, Azure Cloud

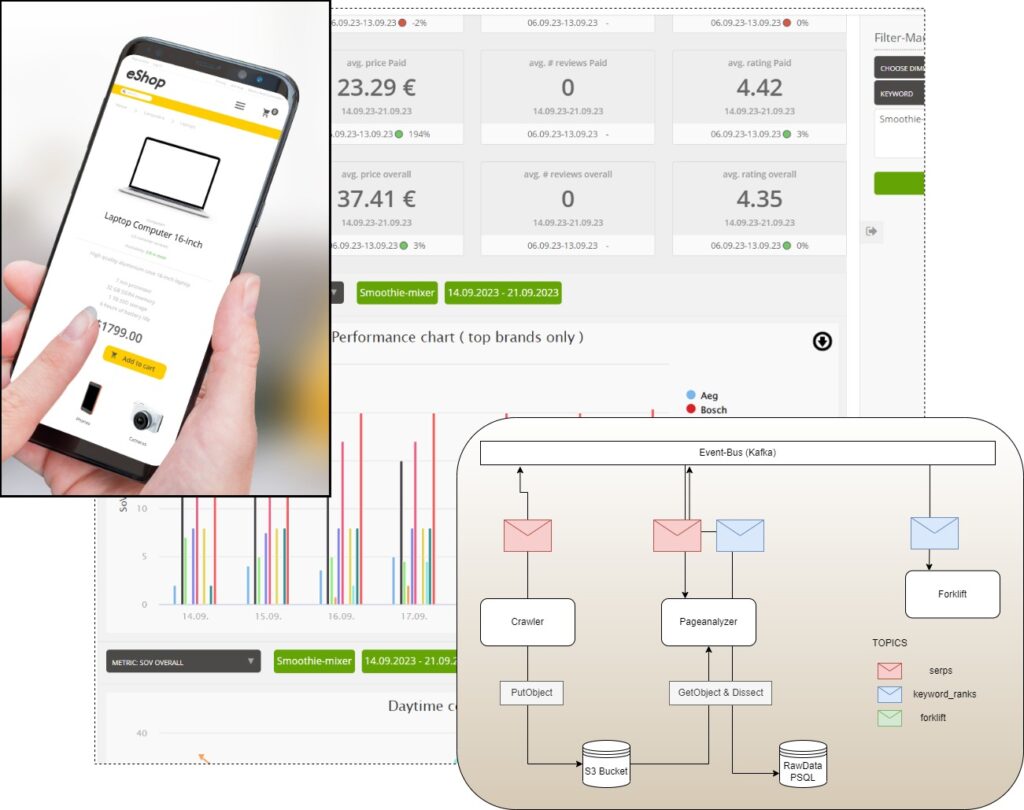

Marketplace Lense

Intensified by the COVID-19 pandemic, sales in B2C e-commerce in Germany have increased rapidly over the last few years. Just as with traditional search engines, potential customers usually click only the first few results of their product search. All offers from the second page onwards remain virtually invisible. Product rankings are therefore a critical success factor for online retailers: the higher the ranking, the higher the turnover. One of our major marketing clients helps companies optimize the visibility of their products on e-commerce platforms. However, this is not possible without the corresponding data, which is where we come in: we take care of the detailed detection and visualization of the product rankings, creating the best possible foundation for successful marketing strategies.

We analyze predefined product keyword sets on local and global top-selling e-commerce platforms multiple times a day. Evaluated and visually processed, the KPIs are made available to our customer and its clients in a personalized dashboard with individual user management. With reports on a client’s share of voice, its visibility compared to major competitors, or its performance depending on the time of day and day of the week, we provide trends for entire companies or individual products. These trends offer enormous benefits for online retailers of all sizes.
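To make the share-of-voice KPI more concrete, here is a small sketch. It assumes one common definition, namely the fraction of top-N result slots across a keyword set that belong to a given brand; the exact definition used in the dashboard may differ, and the function and parameter names are hypothetical.

```python
from collections import Counter


def share_of_voice(rankings: dict, top_n: int = 10) -> dict:
    """Return each brand's share of the top-N result slots.

    `rankings` maps a keyword to the ordered list of brands occupying
    its result positions (position 1 first).
    """
    slots = Counter()
    for brands in rankings.values():
        slots.update(brands[:top_n])   # count only the visible top-N slots
    total = sum(slots.values())
    if total == 0:
        return {}
    return {brand: count / total for brand, count in slots.items()}
```

Running the same calculation per crawl (for example several times a day) and storing the results with a timestamp would yield the time-of-day and day-of-week trends mentioned above.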

Context

Online Retailers, Marketeers, SEO Experts

Skills

Software Development, Web Design, Back- and Frontend, Cloud Infrastructure

Scope

One year development period with regular enhancements and adjustments

Technologies

Python, Java, JavaScript, Terraform

Online/Offline Sales BI and Reporting

With the rise of online marketing and sales vs. traditional agency distribution, a global insurance agency needed to update its internal business intelligence process to account for both channels correctly. We derived a set of technically feasible requirements with the client that would allow us to bring all online and offline data together in one single dataset and even derive online attribution to offline sales for a set of KPIs, measuring the effectiveness of marketing campaigns on traditional offline sales.

Our internal open-source-based data warehouse approaches were used to process data from pre-existing databases as well as mass data from online tracking. For regulatory reasons, we were limited to on-site infrastructure instead of cloud resources. We used scalable, virtual-machine-based setups on existing local hardware to cope with both the seasonality of campaigns and the increasing amount of tracked online interactions. Management board reports were provided via browser-based and email reporting.

Context

Sales, Insurance

Skills

Data Engineering, Domain Analysis, Mass Data Processing

Scope

6-month initial development period with regular data source extensions

Technologies

Java, PostgreSQL, Ansible, JavaScript

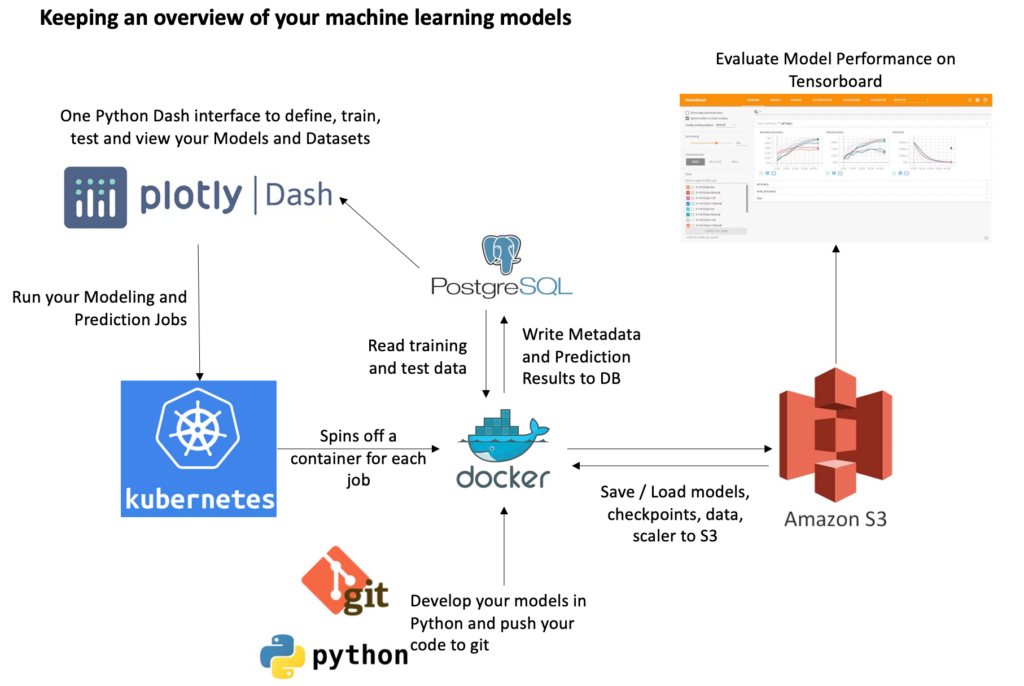

MLOps: Organise and Manage your Machine Learning Models

Everyone working in modeling and analytics knows it: keeping track of the different models you train and systematically evaluating their performance can make you feel a bit lost. It takes at least as much time as defining and coding the actual model. What parameters did I use again? Which model file did I want to scrap? We helped a client from the automotive industry develop a systematic solution to these questions. Building on a suite of open source software products, we found an easy way to keep track of machine learning metadata, giving the experts more time to do the actual modeling and performance analysis.

With Plotly Dash, we created an interface to define datasets and models with different parameters. The dashboard allows our client to start model training and prediction jobs. Thanks to Jenkins and Kubernetes load balancing, several jobs can run concurrently and in any desired environment (GPU acceleration, high RAM and CPU on demand). Each job is spun up in its own Docker container, ensuring a separate and clean environment without dependency conflicts. Model parameters and metadata, job metadata and prediction results are stored in a Postgres database, which runs in its own permanent Docker container. Models, scalers and checkpoints are uploaded to an S3 bucket for later use or for TensorBoard to display model performance. The only tasks left to the developer are model development in Python and code persistence in a Git repository.
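The metadata tracking described above can be sketched in a few lines. This is not the client's schema: the table layout and function names are hypothetical, and the sketch uses Python's built-in `sqlite3` as a stand-in for the Postgres database so that it runs without any setup.

```python
import json
import sqlite3
from datetime import datetime, timezone


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the metadata store and create the (assumed) jobs table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS jobs (
               id           INTEGER PRIMARY KEY AUTOINCREMENT,
               model_name   TEXT NOT NULL,
               git_commit   TEXT NOT NULL,  -- code version the job ran with
               params       TEXT NOT NULL,  -- hyperparameters as JSON
               metrics      TEXT,           -- filled in when the job ends
               artifact_uri TEXT,           -- e.g. location of the model file
               started_at   TEXT NOT NULL
           )"""
    )
    return conn


def record_job(conn, model_name: str, git_commit: str, params: dict) -> int:
    """Register a training job before it starts and return its ID."""
    cur = conn.execute(
        "INSERT INTO jobs (model_name, git_commit, params, started_at) "
        "VALUES (?, ?, ?, ?)",
        (model_name, git_commit, json.dumps(params, sort_keys=True),
         datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()
    return cur.lastrowid


def finish_job(conn, job_id: int, metrics: dict, artifact_uri: str) -> None:
    """Attach results and the artifact location to a finished job."""
    conn.execute(
        "UPDATE jobs SET metrics = ?, artifact_uri = ? WHERE id = ?",
        (json.dumps(metrics, sort_keys=True), artifact_uri, job_id),
    )
    conn.commit()


def params_of(conn, job_id: int) -> dict:
    """Answer the question: what parameters did I use again?"""
    row = conn.execute(
        "SELECT params FROM jobs WHERE id = ?", (job_id,)
    ).fetchone()
    return json.loads(row[0])
```

Storing the Git commit next to the parameters is a design choice of this sketch: together they are enough to re-run a job in a fresh container.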

Context

Data Scientists, Data Engineers

Skills

Cloud Infrastructure, Software Development

Scope

The stack was built within two months of work with our client

Technologies

Kubernetes, Docker, Python 3, Dash, PostgreSQL, AWS, TensorBoard

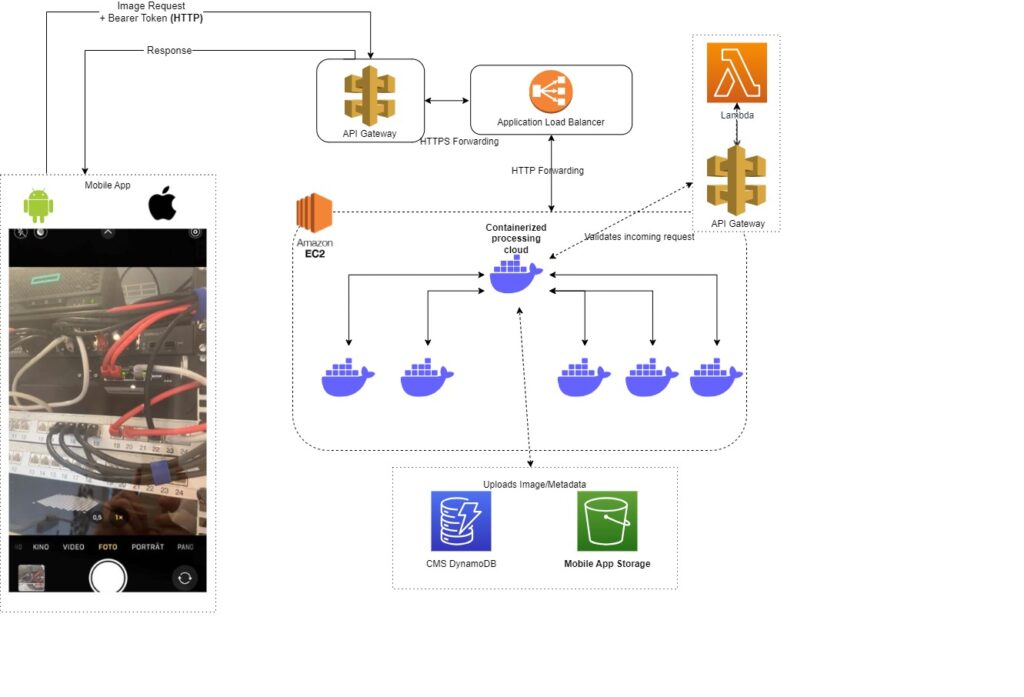

AWS Cloud Image Intake

A leading industrial enterprise experienced difficulties getting customer-taken product images back into the company’s private AWS cloud. Applying our in-depth knowledge of Amazon Web Services (AWS), we constructed a secure cloud infrastructure for the client that can accept and store all images while maintaining stringent security protocols toward the outside world.

The use case was both broad (upload any image from anywhere at any time) and small-scale (only a few hundred uploads per day). We relied on AWS managed services such as API Gateway and Lambda to provide a globally available and cost-efficient solution that can be scaled up in the future if necessary.
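A handler behind API Gateway for such an intake could look roughly like the sketch below. It assumes the Lambda proxy event format (`body`, `isBase64Encoded`) and a hypothetical `store(key, data)` callable that stands in for the actual write to private storage; the size limit and accepted image types are assumptions as well.

```python
import base64
import json
import uuid

MAX_BYTES = 5 * 1024 * 1024  # assumed upload limit
# File signatures of the image types this sketch accepts.
MAGIC = {b"\xff\xd8\xff": "jpg", b"\x89PNG\r\n\x1a\n": "png"}


def detect_type(data: bytes):
    """Return the file extension for a known image signature, else None."""
    for prefix, ext in MAGIC.items():
        if data.startswith(prefix):
            return ext
    return None


def respond(status: int, payload: dict) -> dict:
    """Build a response in the shape the proxy integration expects."""
    return {"statusCode": status, "body": json.dumps(payload)}


def handle_upload(event: dict, store) -> dict:
    """Validate an uploaded image and hand it to `store(key, data)`."""
    body = event.get("body") or ""
    try:
        if event.get("isBase64Encoded"):
            data = base64.b64decode(body, validate=True)
        else:
            data = body.encode("utf-8")
    except ValueError:
        return respond(400, {"error": "body is not valid base64"})
    if len(data) > MAX_BYTES:
        return respond(413, {"error": "image too large"})
    ext = detect_type(data)
    if ext is None:
        return respond(415, {"error": "unsupported image type"})
    key = f"uploads/{uuid.uuid4()}.{ext}"  # never trust client file names
    store(key, data)
    return respond(201, {"key": key})
```

Rejecting anything that is not a recognised image before it is stored keeps the exposed endpoint narrow, which fits the security goal described above.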

Context

Predictive Maintenance, Automotive

Skills

Network Security, Cloud Service Design, FinOps

Scope

4-month development period

Technologies

Python, AWS Managed Services

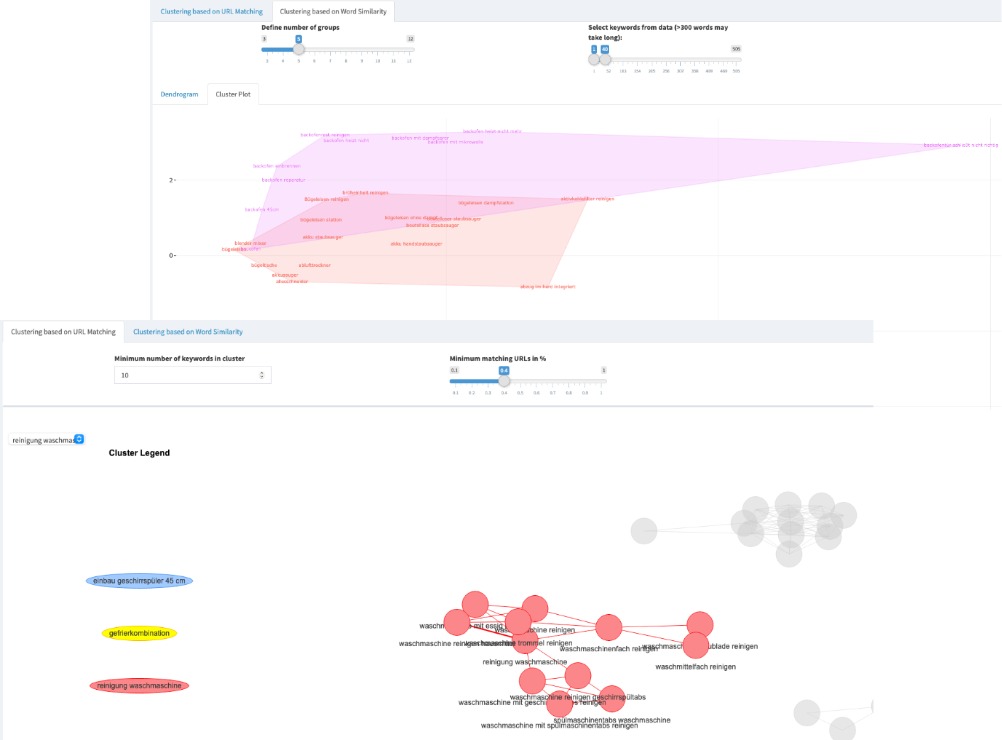

Search Engine Optimization with Keyword Clustering

One of our clients is a big marketing agency involved in Search Engine Optimization (SEO) for thousands of webpages. It takes a good deal of analytics to build these webpages so that they appear at the very top of Google search results (more traffic and clicks). We built an application that interactively visualises the interconnection of keywords, i.e. clusters, based on network analytics, natural language processing and Google search results. With this information, our client can set up webpages more easily and with greater confidence that they will rank higher than competitor sites.

Our client provides us with a list of thousands of keywords for which we scrape Google search results. Once the results are ready, our algorithm detects how keywords link to one another based on network analytics. We can identify the best-connected and most central keywords, the biggest cluster, and how different keyword clusters or topics relate to each other. We also use natural language processing to enrich the analysis. The results can be viewed in an interactive R Shiny dashboard and downloaded to Excel.
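The clustering idea can be sketched as follows. The sketch assumes that two keywords are linked when their search results share at least a given number of URLs, that clusters are the connected components of the resulting graph, and that centrality is measured by the number of links; the production algorithm may use different criteria. It is written in Python for brevity, whereas the project's dashboard is built with R Shiny.

```python
from itertools import combinations


def build_graph(results: dict, min_shared: int = 2) -> dict:
    """Link two keywords if their result sets share >= min_shared URLs.

    `results` maps each keyword to the set of URLs found for it.
    """
    graph = {kw: set() for kw in results}
    for a, b in combinations(results, 2):
        if len(results[a] & results[b]) >= min_shared:
            graph[a].add(b)
            graph[b].add(a)
    return graph


def clusters(graph: dict) -> list:
    """Return connected components, largest first."""
    seen, found = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:                      # iterative depth-first search
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        found.append(component)
    return sorted(found, key=len, reverse=True)


def most_central(graph: dict) -> str:
    """Keyword with the most links (degree centrality)."""
    return max(graph, key=lambda kw: len(graph[kw]))
```

With thousands of keywords, the pairwise comparison in `build_graph` grows quadratically, so a real implementation would more likely index keywords by URL first and compare only those that share at least one result.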

Context

SEO Experts, Marketeers

Skills

Web Dashboard for visualisation and downloadable Excel files

Scope

Project developed over one year with several iteration rounds

Technologies

Network Analytics, Natural Language Processing, R Shiny, Web Scraping